Understanding the implications of short selling in the context of explainable AI.

Short selling, a common practice in financial markets, involves borrowing and selling securities with the expectation of buying them back at a lower price. This practice is often closely monitored for its impact on overall market sentiment and stock prices. In the domain of explainable AI (XAI), a similar dynamic exists, albeit focused on the potential for "short-selling" of valuable insights and the impact on model credibility. This aspect of XAI scrutinizes how effectively explained models resist misinterpretations, highlighting vulnerabilities and ensuring the transparency of AI decision-making.

The importance of this type of scrutiny stems from the desire for trust and reliability in AI-driven systems. Misinterpretations or vulnerabilities within an XAI model can lead to biased or inaccurate predictions. A failure to provide sound, clear explanations can damage the model's reputation and decrease reliance. Historical analyses of market sentiment surrounding short selling have demonstrated the power of skepticism. This echoes the need for thorough analysis and robust testing within XAI to ensure the soundness of explanations and prevent any issues that might undermine the model's credibility. This rigorous approach contributes to the overall ethical and responsible deployment of AI systems.


Let's now delve into specific areas of XAI model analysis, focusing on the aspects of model fragility and the challenges of maintaining trust and credibility as they relate to financial markets and other critical domains where XAI plays a prominent role.

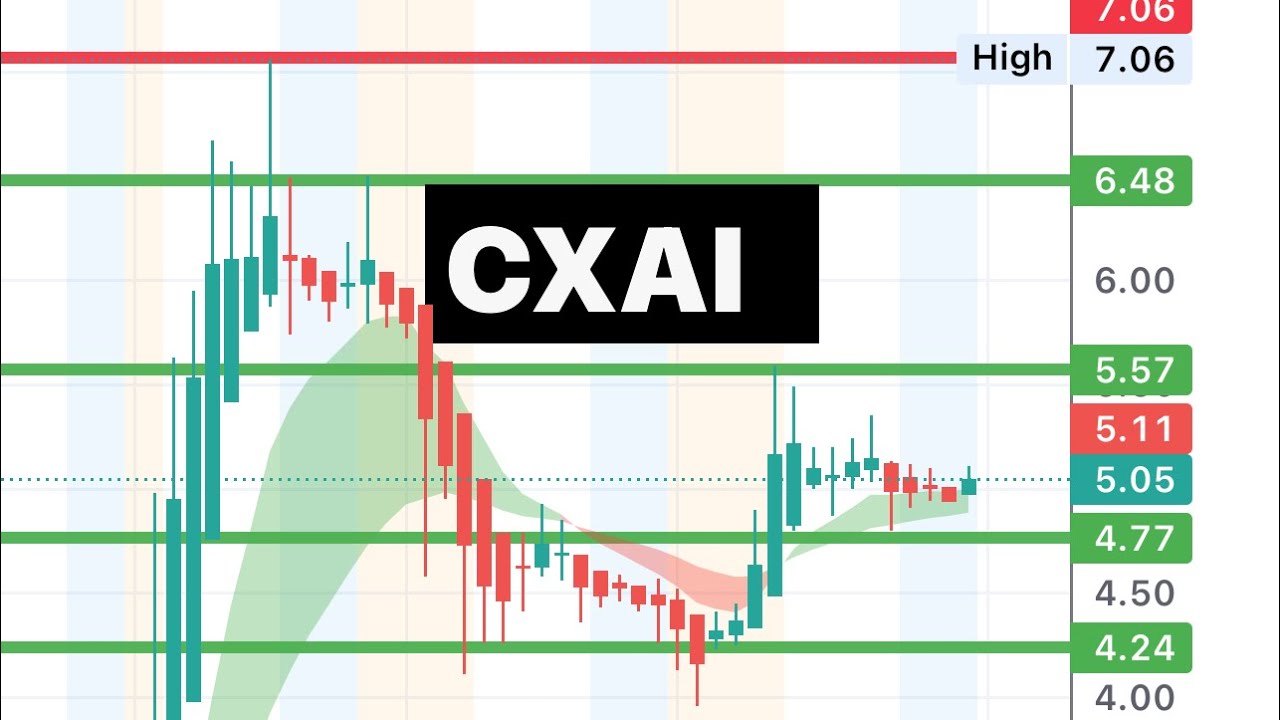

cxai short interest

Understanding "cxai short interest" requires analyzing the potential vulnerabilities within explainable AI (XAI) models. This scrutiny, akin to short selling, focuses on identified weaknesses and potential misinterpretations.

- Model fragility

- Bias detection

- Explanation reliability

- Credibility assessment

- Trust maintenance

- Vulnerability analysis

- Data integrity

These aspects, collectively, represent a comprehensive approach to evaluating XAI models. Model fragility, for example, examines how easily interpretations can be manipulated or misconstrued. Bias detection highlights the critical need to identify and mitigate any inherent biases within the model's explanations. Robustness of explanations is paramount. A model lacking sufficient credibility, like a financial instrument deemed unreliable, can lead to a diminished trust in its conclusions. Analysis of potential vulnerabilities necessitates careful investigation, analogous to short-selling, to determine model limitations and improve its stability. Data integrity underpins the entire process, emphasizing the importance of precise and accurate data used to generate explanations. These aspects must be scrutinized to ensure the reliability of XAI models.

1. Model Fragility

Model fragility, a crucial element in evaluating explainable AI (XAI) models, directly relates to the concept of "cxai short interest." Fragility represents the susceptibility of an XAI model to manipulation, misinterpretation, or the generation of misleading or unreliable explanations. Understanding this fragility is essential to assess the true value and trustworthiness of the model's outputs, mirroring the financial market scrutiny of short selling.

- Interpretational Vulnerability

XAI models, aiming for transparency, can be vulnerable to exploitation through manipulations of the input data or even targeted attacks. This vulnerability arises when the model's explanation mechanism is susceptible to manipulation, leading to biased or incorrect justifications. For example, a model might be overly sensitive to certain types of input data, making it prone to producing inaccurate or inconsistent explanations in response to specific or adversarial examples. This susceptibility to misinterpretation directly parallels the concept of short selling, where an understanding of inherent vulnerabilities can lead to a perceived advantage in the market.

- Bias Amplification

The fragility of an XAI model can amplify existing biases within the data it processes. If the underlying data contains biases, the model's explanations might reflect and potentially exacerbate these biases. The model might, for instance, provide justifications that are unduly influenced by demographic traits. This is akin to short selling strategies potentially exploiting data biases that are not transparently accounted for in an XAI model.


- Explanation Inconsistency

Fragile models often display inconsistencies in their generated explanations, potentially offering different justifications for the same prediction. This inconsistency undercuts the model's credibility, much like a financial instrument with volatile and unpredictable performance. This inconsistency makes it difficult to ascertain the reliability of the model's output and highlights potential vulnerabilities exploited by those seeking to "short" the model's trustworthiness.

- Sensitivity to Data Perturbations

Models whose outputs or explanations deviate significantly in response to minor changes in input data raise concerns about fragility. Such models may not provide consistent explanations for similar inputs, which creates uncertainty about their robustness. This parallels short interest in financial markets: a stock whose price swings sharply in response to minor external events would concern investors.

In conclusion, the fragility of XAI models, including vulnerabilities in their interpretations, potential bias amplification, inconsistent explanations, and sensitivity to data perturbations, directly relates to "cxai short interest." Analysis of these facets illuminates the model's reliability and its susceptibility to scrutiny akin to short selling practices in financial markets. This understanding is crucial to establish trust and validate XAI models' accuracy in various applications.
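One way to make sensitivity to data perturbations concrete is to add small random noise to an input and check how often the top-ranked feature in the explanation stays the same. The sketch below is illustrative only: it assumes a hypothetical linear model whose explanation is simply weight times feature value, and the weights, feature names, and noise level are all made up.

```python
import random

# Hypothetical linear model; the weights are made up for illustration.
WEIGHTS = {"income": 0.6, "debt_ratio": -0.8, "account_age": 0.3}

def attributions(x):
    """Per-feature contribution (weight * value) to a linear score."""
    return {name: WEIGHTS[name] * x[name] for name in WEIGHTS}

def top_feature(x):
    """Feature with the largest absolute contribution."""
    attr = attributions(x)
    return max(attr, key=lambda name: abs(attr[name]))

def explanation_stability(x, noise=0.01, trials=200, seed=0):
    """Fraction of perturbed copies of x whose top feature matches the original."""
    rng = random.Random(seed)
    baseline = top_feature(x)
    same = 0
    for _ in range(trials):
        # Add small uniform noise to every feature.
        perturbed = {k: v + rng.uniform(-noise, noise) for k, v in x.items()}
        if top_feature(perturbed) == baseline:
            same += 1
    return same / trials

# One feature clearly dominates here, so the explanation should be stable.
stable_input = {"income": 1.0, "debt_ratio": 0.2, "account_age": 0.5}
# Two features contribute almost equally, so tiny noise can swap the ranking.
fragile_input = {"income": 0.40, "debt_ratio": 0.30, "account_age": 0.5}

print(explanation_stability(stable_input))   # 1.0
print(explanation_stability(fragile_input))  # noticeably below 1.0
```

A stability score well below 1.0 for near-identical inputs is the kind of inconsistency described above. For a real model, the attribution function would be replaced by whatever explanation method is actually in use.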

2. Bias Detection

Bias detection within explainable AI (XAI) models is critical to understanding "cxai short interest." Hidden biases in the data used to train these models can lead to inaccurate and unfair outcomes. This, in turn, undermines the model's trustworthiness and raises concerns about its suitability for various applications, especially where fairness and equitable decision-making are paramount. The identification and mitigation of biases are essential to ensure responsible and reliable AI deployment.

- Data Bias Manifestation

Data used to train XAI models often reflects existing societal biases. These biases can manifest in various ways, potentially leading to skewed or prejudiced predictions and explanations. For example, if a model is trained on historical data predominantly featuring one demographic, it might exhibit biases against other groups. This is analogous to "cxai short interest" in that a lack of representation within training data mirrors potential shortfalls in model accuracy and reliability, creating areas of potential weakness for scrutiny.

- Bias Amplification Through Explanations

The explanatory mechanisms of XAI models can inadvertently amplify biases embedded within the data. If an XAI model's explanations emphasize irrelevant factors (such as a demographic characteristic) as crucial for a particular prediction, it reinforces harmful biases. Identifying and rectifying these biased justifications is analogous to "cxai short interest" in that it exposes vulnerabilities and areas where the model's reliability can be challenged.

- Algorithmic Bias Identification

Bias is not solely limited to data; algorithms themselves can perpetuate biases. An algorithm designed to predict outcomes may favor certain groups over others or contain an implicit bias due to its design. This algorithmic bias can lead to systemic unfairness in XAI models' predictions and explanations, mirroring concerns in the "cxai short interest" concept by creating areas of potential model vulnerability.

- Impact on Decision-Making Trust

The presence of undetected bias in XAI models can compromise the trust placed in their decisions. If explanations highlight biased factors, users (or stakeholders) might question the fairness and legitimacy of the system's conclusions. The identification of bias becomes crucial, analogous to the "cxai short interest" concept, because it reveals potential vulnerabilities in the model's credibility. This affects the wide-ranging acceptability and successful use of the XAI models.

In summary, bias detection is integral to responsible XAI development. Identifying and mitigating biases in the data, algorithms, and explanations of XAI models is crucial to ensuring fairness and trust in their outputs, mirroring the need for scrutinizing financial instruments. By actively seeking and addressing potential biases, practitioners can enhance the trustworthiness and reliability of XAI models, reducing the concerns inherent in "cxai short interest."
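A simple first check for the kind of data bias described above is to compare outcome rates across groups, a measure often called the demographic parity difference. The following is a minimal sketch using made-up loan decisions. The group labels and records are hypothetical, and a large gap is a signal to investigate rather than proof of unfairness.

```python
from collections import defaultdict

def approval_rates(records):
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def parity_gap(records):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(records)
    return max(rates.values()) - min(rates.values())

# Made-up decisions for illustration only.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(approval_rates(decisions))  # {'A': 0.75, 'B': 0.25}
print(parity_gap(decisions))      # 0.5
```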

3. Explanation Reliability

Explanation reliability is a cornerstone of explainable AI (XAI). The trustworthiness of an XAI model hinges on the dependability of its explanations. A model providing unreliable explanations (ones that are inaccurate, misleading, or fail to adequately justify outcomes) is analogous to a financial instrument with questionable valuations. In the context of "cxai short interest," unreliable explanations are crucial vulnerabilities, posing risks to the model's credibility and potentially leading to its devaluation in the eyes of users or stakeholders. The inability to ascertain the reliability of explanations fuels skepticism, echoing the rationale behind short selling in financial markets.

Consider a scenario where an XAI model used in loan applications consistently produces explanations that favor certain demographics over others. If these explanations are not reliably accurate, their lack of transparency and fairness compromises the model's trustworthiness. Users or lenders might interpret the model as biased, potentially leading to mistrust and a decrease in model adoption or acceptance. This lack of reliability is directly analogous to "cxai short interest," that is, the perception of a model's vulnerability to criticism and skepticism. In this case, the model's unreliable explanations become a significant weakness that investors (users) might "short." Conversely, if the explanations consistently provide accurate and unbiased justifications for decisions, it enhances the model's credibility. This aligns with financial markets where sound valuation models underpin trust in investment decisions. The reliability of an XAI model's explanations thus reflects its inherent value and trustworthiness, directly impacting how it is perceived and used.

The practical significance of understanding explanation reliability within XAI extends to diverse fields. In healthcare, unreliable explanations for diagnostic recommendations can lead to patient mistrust and potential medical errors. In finance, unreliable risk assessment explanations could result in suboptimal investment strategies. Accurate, transparent, and understandable explanations are essential to fostering confidence in XAI model outputs. Without this reliability, a model faces scrutiny similar to the financial market's "short interest." An understanding of the importance of explanation reliability is crucial not only for XAI model development but also for ensuring that these models are utilized responsibly and ethically across various sectors.
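One common way to test whether an explanation is reliable is a deletion check: if an explanation claims a feature matters most, replacing that feature with a neutral baseline should change the prediction more than replacing a feature ranked lower. The sketch below assumes a hypothetical scoring function and a zero baseline. Both are illustrative choices, not part of any particular XAI library.

```python
def model(x):
    """Hypothetical scoring function; the coefficients are illustrative."""
    return 2.0 * x["a"] + 0.5 * x["b"] + 0.1 * x["c"]

def deletion_effects(predict, x, baseline=0.0):
    """Absolute change in output when each feature is replaced by a baseline value."""
    original = predict(x)
    effects = {}
    for name in x:
        modified = dict(x)
        modified[name] = baseline
        effects[name] = abs(original - predict(modified))
    return effects

def ranking_is_faithful(predict, x, claimed_ranking):
    """True if the claimed importance order matches the order of deletion effects."""
    effects = deletion_effects(predict, x)
    observed = sorted(effects, key=effects.get, reverse=True)
    return observed == list(claimed_ranking)

x = {"a": 1.0, "b": 1.0, "c": 1.0}
print(ranking_is_faithful(model, x, ["a", "b", "c"]))  # True
print(ranking_is_faithful(model, x, ["c", "b", "a"]))  # False
```

An explanation that fails this kind of check is claiming importance for features the model does not actually depend on, which is one concrete form of the unreliability discussed above.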

4. Credibility Assessment

Credibility assessment of explainable AI (XAI) models is directly pertinent to the concept of "cxai short interest." Assessing a model's credibility involves evaluating the trustworthiness and reliability of its explanations. This evaluation parallels the scrutiny applied to financial instruments where factors like transparency, consistent performance, and historical data inform investment decisions. A lack of credibility, much like the perceived vulnerability of a financial asset, can lead to decreased trust and potentially "short selling" of the model's value or usefulness. Thus, robust credibility assessments are vital to prevent potentially negative impacts on model adoption and efficacy.

- Transparency of Explanations

The clarity and transparency of explanations directly impact credibility. Models offering readily understandable justifications for their decisions enhance credibility. Conversely, opaque or overly complex explanations engender skepticism, aligning with the potential for short interest. For instance, a model that provides a detailed breakdown of how it arrived at a diagnosis, highlighting specific factors and data points, increases confidence in its output. In contrast, a model offering a vague or generic explanation raises doubts, much like an opaque financial report might deter investment.

- Consistency and Stability of Performance

Consistent performance across various inputs and scenarios builds credibility. Fluctuations or inconsistencies in outcomes, particularly in response to similar inputs, evoke doubt, mirroring concerns surrounding short selling in financial markets. A consistently accurate model earns trust, while a model whose predictions change erratically, much like a volatile stock, reduces its appeal and value, becoming a target for scrutiny.

- Historical Performance and Data Validation

Models with a track record of accurate predictions, validated against known data sets and historical benchmarks, gain credibility. Robust validation and clear documentation of the model's training data and performance are key. A model's historical performance, much like a company's financial history, is an important factor in evaluating its credibility. If a model consistently delivers unsatisfactory results or if its performance suffers significant variance, this might lead to similar concerns as a decline in the financial performance of a company, potentially prompting "short interest."

- Bias Detection and Mitigation Strategies

Models demonstrating a commitment to identifying and mitigating biases enhance credibility. Bias detection and mitigation mechanisms are essential to ensuring fairness and impartiality in the model's decision-making processes. An XAI model transparently acknowledging and addressing potential biases builds trust and credibility, much like a company disclosing risks associated with its operations. Conversely, a model that exhibits undisclosed or unmitigated bias creates skepticism akin to the "short interest" phenomenon.

In summary, credibility assessment within XAI models is crucial. Transparency, consistent performance, historical validation, and a demonstrable commitment to fairness form the bedrock of credibility. A model that lacks these attributes invites scrutiny, much like a financial instrument deemed questionable, thereby aligning with the concept of "cxai short interest." Robust credibility assessment safeguards the responsible deployment and effective utilization of XAI models, minimizing skepticism and fostering trust.
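Consistency of performance can be summarized by measuring accuracy on several evaluation slices (for example, different time periods) and looking at both the average and the spread. This is a rough sketch with made-up predictions. The slice names and labels are hypothetical, and the population standard deviation is only one of several reasonable spread measures.

```python
from statistics import mean, pstdev

def accuracy(pairs):
    """Share of (predicted, actual) pairs that agree."""
    return sum(p == a for p, a in pairs) / len(pairs)

def performance_summary(slices):
    """Mean and spread of accuracy across named evaluation slices."""
    scores = {name: accuracy(pairs) for name, pairs in slices.items()}
    values = list(scores.values())
    return {"scores": scores, "mean": mean(values), "spread": pstdev(values)}

# Made-up (predicted, actual) labels per slice, for illustration only.
slices = {
    "2021": [(1, 1), (0, 0), (1, 1), (0, 0)],
    "2022": [(1, 1), (0, 1), (1, 1), (0, 0)],
    "2023": [(1, 0), (0, 1), (1, 1), (0, 0)],
}

summary = performance_summary(slices)
print(summary["scores"])  # {'2021': 1.0, '2022': 0.75, '2023': 0.5}
print(summary["mean"])    # 0.75
```

Here the average looks acceptable, but the steady decline across slices and the wide spread are exactly the kind of variance that would invite scrutiny.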

5. Trust Maintenance

Maintaining trust in explainable AI (XAI) models is paramount. A model's ability to inspire confidence directly correlates with its utility and adoption. Failures in maintaining trust can lead to a loss of confidence, resembling the concept of "cxai short interest" where perceived vulnerabilities lead to decreased value and potential skepticism. This exploration examines key aspects of trust maintenance within XAI systems, highlighting their relationship to the potential for skepticism.

- Transparency and Explainability

Transparent and readily understandable explanations are foundational to trust. Clear articulation of decision-making processes, avoiding jargon or ambiguity, fosters comprehension and reduces suspicion. Conversely, obscure or overly complex explanations breed skepticism and diminish trust, echoing the dynamics of "cxai short interest." Examples include clear visualizations of the reasoning behind predictions in medical diagnoses or financial risk assessments. A lack of transparency, akin to hidden risks in a financial instrument, reduces credibility and may invite scrutiny, which is similar to "cxai short interest."

- Fairness and Impartiality

The model must consistently exhibit impartiality and fairness in its decision-making. Biases in data or algorithms can introduce systematic unfairness, damaging trust. The detection and mitigation of biases are vital. For example, a loan approval model that disproportionately denies loans to particular demographics without clear justification generates suspicion, resembling "cxai short interest." Fairness and impartiality are crucial elements of trustworthiness within XAI.

- Robustness and Reliability

A model's consistent and reliable performance builds trust. Fluctuations in outputs or explanations without corresponding changes in inputs or underlying data evoke doubt. A robust and reliable model consistently produces accurate results, much like a dependable financial instrument. Conversely, unpredictable behavior or susceptibility to manipulation, analogous to a financial asset with hidden vulnerabilities, reduces trust. Such models invite the same scrutiny and distrust as the "cxai short interest" phenomenon.

- Accountability and Corrective Mechanisms

Clear lines of accountability and mechanisms for addressing errors or biases are essential for trust maintenance. If a model makes an error, acknowledgement and steps to correct the issue are crucial for regaining trust. A lack of accountability can engender the same distrust and scrutiny as the perceived vulnerabilities of "cxai short interest." An example would be a clear system for users to report errors or biases in an XAI system.

Ultimately, maintaining trust in XAI models is directly linked to avoiding the vulnerabilities of "cxai short interest." By prioritizing transparency, fairness, robustness, and accountability, organizations can build a strong foundation of trust, ensuring the widespread adoption and responsible deployment of XAI systems. The absence of these features can lead to the same skepticism and scrutiny observed in the "cxai short interest" context.

6. Vulnerability Analysis

Vulnerability analysis, a crucial component of evaluating explainable AI (XAI) models, directly relates to the concept of "cxai short interest." This analysis examines the potential weaknesses within an XAI system, identifying areas susceptible to manipulation, misinterpretation, or exploitation. This scrutiny, analogous to short selling strategies in financial markets, focuses on pinpointing model limitations and potential sources of error, much as an analyst pinpoints areas of financial risk.

The importance of vulnerability analysis stems from the need to anticipate and mitigate potential harms associated with XAI deployment. A model exhibiting vulnerabilities risks generating inaccurate or biased outcomes, potentially leading to adverse consequences across various applications. Consider a medical diagnosis model; if the model exhibits a vulnerability to certain input data patterns, this could result in inaccurate diagnoses, potentially jeopardizing patient health. Similarly, in financial risk assessments, if the model is vulnerable to adversarial input data, it could lead to flawed predictions and potentially costly errors. Addressing these vulnerabilities is akin to mitigating financial risks; an analysis of potential vulnerabilities prevents potential loss. This anticipation of potential pitfalls is vital for the responsible and effective use of XAI models.

Furthermore, thorough vulnerability analysis is intrinsically linked to the reliability and trustworthiness of XAI models. Understanding the model's limitations allows for the development of strategies to mitigate their potential negative effects. This analysis helps stakeholders anticipate and address potential issues. By proactively identifying vulnerabilities, researchers and developers can build more resilient and reliable XAI models. This approach directly translates to a stronger, more dependable system, decreasing the potential for outcomes mirroring "cxai short interest" or instances of mistrust associated with vulnerabilities. In practical terms, this understanding guides the development of robust validation procedures and testing methodologies, ultimately strengthening the foundation of trust in these models.
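A basic vulnerability probe is to search for the smallest change to a single input feature that flips the model's decision. The sketch below uses a hypothetical threshold classifier with made-up weights and a simple grid search. Real adversarial testing would typically use more sophisticated search, so this only shows the idea.

```python
def approve(x):
    """Hypothetical threshold classifier; weights and cutoff are illustrative."""
    score = 0.5 * x["income"] - 0.7 * x["debt_ratio"]
    return score >= 0.2

def smallest_flip(predict, x, feature, step=0.01, max_steps=100):
    """Smallest tested change to one feature (in either direction) that flips
    the decision, or None if no flip is found within max_steps."""
    original = predict(x)
    for i in range(1, max_steps + 1):
        for sign in (1, -1):
            delta = sign * i * step
            candidate = dict(x)
            candidate[feature] = x[feature] + delta
            if predict(candidate) != original:
                return delta
    return None

# Made-up applicant on a normalized scale, for illustration only.
applicant = {"income": 0.9, "debt_ratio": 0.3}

# How far must debt_ratio move before the approval is reversed?
print(smallest_flip(approve, applicant, "debt_ratio"))
```

A very small flipping distance means the decision sits close to a boundary, so minor data errors or deliberate manipulation could change the outcome.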

7. Data Integrity

Data integrity is foundational to the trustworthiness and reliability of explainable AI (XAI) models. In the context of "cxai short interest," data integrity directly impacts the perceived vulnerabilities and potential for skepticism surrounding these models. Compromised data integrity can lead to inaccurate or misleading explanations, undermining the model's credibility and potentially mirroring the concerns associated with financial instruments subject to scrutiny.

- Accuracy and Completeness

Data accuracy and completeness are fundamental. Inaccurate or incomplete data directly affect the model's ability to learn and produce reliable explanations. If a model used in loan applications is trained on data with inaccuracies regarding applicant income or credit history, the model's predictions will be skewed. This inherent data deficiency mirrors the vulnerability of a financial instrument derived from inaccurate information; the model's predictions are questionable, leading to potential criticism and skepticism, much like "cxai short interest."

- Consistency and Uniformity

Data consistency and uniformity are crucial for model training. Inconsistencies within datasets, or inconsistencies in formatting, can lead to unreliable results. A model used for identifying fraud in financial transactions must have consistent data formatting for dates, times, and transaction amounts. Inconsistent data might generate misleading conclusions, mirroring the potential for error and causing users to view the model with suspicion, which relates to the concept of "cxai short interest."

- Data Source Reliability

The source of the data used to train an XAI model significantly affects its trustworthiness. Using data from a flawed or unreliable source introduces biases or inconsistencies that negatively impact model accuracy. A model relying on data from an untrustworthy or biased source might produce skewed explanations and predictions. This reliability concern parallels situations where financial data sources are suspect, causing the model and its performance to be viewed with skepticism, aligning with the concept of "cxai short interest."

- Data Validation and Cleansing

Rigorous data validation and cleansing procedures are necessary for maintaining data integrity. Validation involves checking data accuracy, completeness, and consistency, while cleansing addresses errors and inconsistencies. A model trained on data lacking robust validation and cleansing procedures could generate erroneous results. This situation resembles the risk of financial instruments based on inaccurate data; the lack of validation can lead to questions about the model's reliability and triggers the concerns surrounding "cxai short interest." Proper validation enhances the model's credibility, reducing potential skepticism.

In summary, data integrity, including accuracy, consistency, source reliability, and thorough validation, is essential to maintaining the trustworthiness of XAI models. Without data integrity, models become vulnerable to producing inaccurate or biased results, fostering mistrust and skepticism, which directly aligns with the concept of "cxai short interest." A robust dataset is vital for an XAI model to be viewed as reliable and trustworthy, enabling effective use and widespread adoption.
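The validation step described above (checking completeness, accuracy, and consistent formatting) can be sketched as a per-record check. The field names, the required-field list, and the expected date format below are assumptions chosen for illustration. A real pipeline would define these from its own schema.

```python
from datetime import datetime

# Hypothetical schema for a loan-application record.
REQUIRED_FIELDS = ("applicant_id", "income", "application_date")

def validate_record(record):
    """Return a list of integrity problems found in one record."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Accuracy: income cannot be negative.
    income = record.get("income")
    if isinstance(income, (int, float)) and income < 0:
        problems.append("negative income")
    # Consistency: dates must use one format (YYYY-MM-DD assumed here).
    date = record.get("application_date")
    if isinstance(date, str) and date:
        try:
            datetime.strptime(date, "%Y-%m-%d")
        except ValueError:
            problems.append("date not in YYYY-MM-DD format")
    return problems

good = {"applicant_id": 1, "income": 52000, "application_date": "2023-04-01"}
bad = {"applicant_id": 2, "income": -10, "application_date": "04/01/2023"}

print(validate_record(good))  # []
print(validate_record(bad))   # ['negative income', 'date not in YYYY-MM-DD format']
```

Records that fail validation can then be corrected or excluded before training, which is the cleansing half of the process.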

Frequently Asked Questions about "cxai Short Interest"

This section addresses common inquiries regarding "cxai short interest," a term referring to the scrutiny and evaluation of vulnerabilities within explainable AI (XAI) models. The questions and answers aim to clarify concepts and provide context for the potential challenges in deploying XAI systems.

Question 1: What does "cxai short interest" actually mean?

The term "cxai short interest" reflects the analytical process of identifying potential weaknesses and vulnerabilities in explainable AI (XAI) models. It's analogous to short selling in financial markets, where an investor anticipates a decline in an asset's value. Similarly, "cxai short interest" examines potential flaws in XAI models, such as biases, inconsistencies, or susceptibility to manipulation. This scrutiny helps evaluate the model's overall trustworthiness and its potential risks.

Question 2: Why is "cxai short interest" important?

Understanding "cxai short interest" is crucial for responsible XAI deployment. Identifying potential model vulnerabilities helps ensure the model's accuracy and reliability, mitigating the risk of producing biased or inaccurate outcomes. This proactive approach safeguards against adverse consequences, particularly in critical applications such as healthcare or finance, where model accuracy directly impacts decision-making.

Question 3: How does "cxai short interest" relate to bias in XAI models?

Bias in XAI models is a key aspect of "cxai short interest." This scrutiny involves investigating whether the model's explanations or predictions exhibit bias originating from inherent data biases or algorithmic design flaws. Understanding and mitigating bias within models is essential for ensuring fair and equitable outcomes, which directly addresses the core concerns surrounding "cxai short interest."

Question 4: What are some examples of potential vulnerabilities within an XAI model?

Potential vulnerabilities encompass various aspects, including the model's susceptibility to manipulation, inconsistencies in explanations, sensitivity to data variations, or the presence of undetected biases. For example, a model might produce different explanations for similar predictions, or its outputs could be unduly influenced by particular demographic characteristics within the data. These vulnerabilities are the focus of "cxai short interest" analysis.

Question 5: How can organizations mitigate the concerns associated with "cxai short interest"?

Organizations can mitigate concerns by implementing rigorous validation and testing procedures, conducting thorough vulnerability analyses, and developing models that ensure transparency and accountability in decision-making processes. These proactive steps build trust, address potential biases, and ultimately support the responsible development and deployment of XAI systems. A strong focus on data integrity, addressing fairness concerns, and maintaining consistency throughout the model's lifecycle are vital in this context.

Understanding "cxai short interest" is vital for responsible AI development and deployment. By acknowledging potential vulnerabilities and adopting proactive mitigation strategies, stakeholders can promote trust and reliability within XAI systems, ensuring responsible and accurate outcomes.

Let's now move on to explore specific methods for conducting vulnerability analysis within explainable AI (XAI) models.

Conclusion

The exploration of "cxai short interest" reveals a critical need for rigorous evaluation of explainable AI (XAI) models. This analysis, akin to short-selling in financial markets, highlights potential vulnerabilities, biases, and inconsistencies within XAI systems. Key aspects examined include model fragility, susceptibility to manipulation, explanation reliability, and data integrity. The potential for inaccurate or biased outputs underscores the necessity for transparent and accountable models. Addressing these vulnerabilities, through meticulous data validation, bias mitigation strategies, and rigorous testing, is paramount to ensure reliable and trustworthy XAI deployments. Without this proactive approach, concerns about model credibility and potential misuse persist, echoing similar anxieties present in the financial sector.

Moving forward, a continued focus on vulnerability analysis, bias detection, and explanation reliability is essential. The ongoing development and refinement of XAI methodologies should prioritize ethical considerations, transparency, and accountability. Ultimately, the successful integration of XAI hinges on the ability to build models that inspire trust and ensure equitable outcomes in a multitude of applications. A comprehensive approach to "cxai short interest" analysis, therefore, remains critical to the responsible and effective utilization of explainable AI systems. Further research and collaborative efforts are needed to address the complex challenges and ensure the positive societal impact of XAI.
